Every CMO is chasing the same question underneath all the dashboards and attribution reports: which parts of my marketing actually create new demand, and which parts just capture what would have happened anyway?

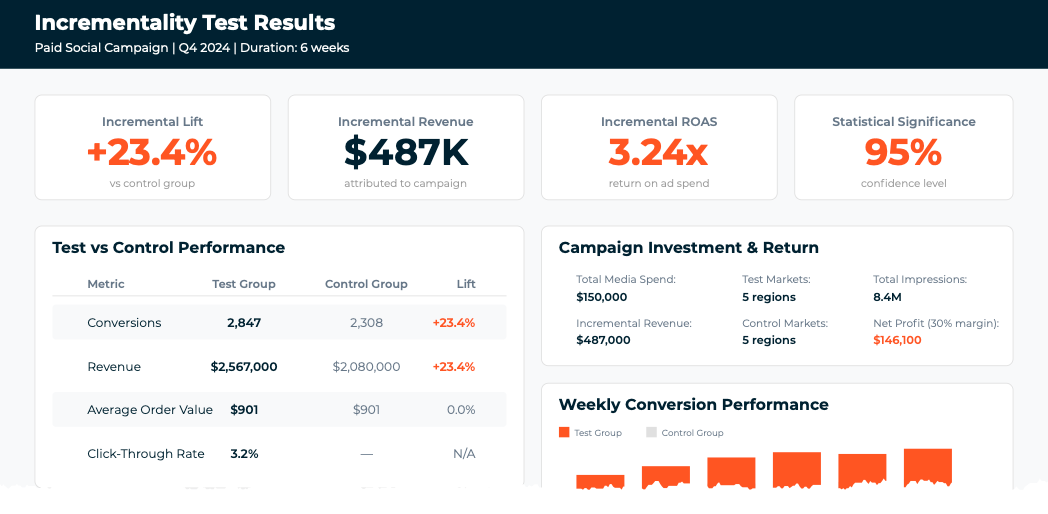

Incrementality experiments answer that question directly. They compare people who saw your marketing against people who didn't, so you can see what your spend actually created.

Incrementality is the additional value a specific channel, campaign, or tactic creates beyond what would have occurred without it. These experiments compare exposed and unexposed groups so you can measure that lift with confidence.

Instead of asking "How many conversions did this get?", incrementality asks "How many of these conversions happened only because we did this?"

The outputs are metrics like incremental conversions, incremental revenue, and incremental ROAS. These give you a more accurate read on effectiveness than raw or attributed numbers.
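Here is a minimal sketch of how these three metrics relate. It assumes an audience-level holdout, where the control group's conversion rate stands in for what the exposed group would have done without the marketing. The function name, its inputs, and the simplification that revenue equals conversions times a fixed average order value are all illustrative assumptions. The numbers in the example are made up.

```python
from dataclasses import dataclass


@dataclass
class IncrementalityResult:
    incremental_conversions: float
    incremental_revenue: float
    iroas: float  # incremental revenue per dollar of spend


def incrementality_metrics(
    treat_users: int,
    treat_conversions: int,
    control_users: int,
    control_conversions: int,
    avg_order_value: float,
    spend: float,
) -> IncrementalityResult:
    """Hypothetical helper: estimate incremental outcomes from an exposed/holdout split."""
    # Counterfactual: conversions the exposed group would have produced
    # at the holdout group's conversion rate.
    control_rate = control_conversions / control_users
    expected_without_marketing = control_rate * treat_users

    incremental_conversions = treat_conversions - expected_without_marketing
    # Simplifying assumption: every conversion is worth the same average order value.
    incremental_revenue = incremental_conversions * avg_order_value
    return IncrementalityResult(
        incremental_conversions=incremental_conversions,
        incremental_revenue=incremental_revenue,
        iroas=incremental_revenue / spend,
    )


# Illustrative inputs: 90% of users exposed, 10% held out.
result = incrementality_metrics(
    treat_users=90_000, treat_conversions=2_700,
    control_users=10_000, control_conversions=250,
    avg_order_value=80.0, spend=20_000.0,
)
print(result)
```

With these inputs, the holdout rate of 2.5% implies about 2,250 conversions would have happened anyway. That leaves roughly 450 of the 2,700 as incremental, for an incremental ROAS of about 1.8.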

At this point, you may be thinking, "We already do A/B tests, so we're good." But A/B tests and incrementality experiments answer different questions.

A/B tests compare versions. You show half your audience one creative and half another, then see which performs better. This tells you which option wins, but not whether either option created demand that wouldn't have existed otherwise.

Incrementality experiments compare exposure to no exposure. You show one group your marketing and keep another group clean, then measure the difference. This tells you whether the activity itself added value.

Both matter. A/B tests help you optimize execution. Incrementality experiments tell you whether the investment is worth it in the first place.

Modern measurement has gotten noisy. Privacy changes, walled gardens, and AI-driven media buying have all made traditional attribution less reliable on its own. Incrementality experiments give you a reference point for what's actually working, so you can make budget decisions with more confidence.

Experiments are more trustworthy than attribution alone because you're running a controlled comparison rather than trying to reverse-engineer what drove the result.

For CMOs, this means clearer answers to strategic questions: which channels to scale, what to cut, and how aggressively to invest in new platforms or in journey-based campaigns focused on stages such as awareness and evaluation.

Most incrementality experiments follow the same basic pattern, whether you're testing a paid social campaign, an email program, or a connected TV buy.

Start with a hypothesis.

You need something specific and testable: what you're testing, what outcome you expect, and a timeframe. Something like: "Over the next six weeks, running paid social in these five markets will drive more purchases than matched markets where we don't run it."

Vague hypotheses like "brand advertising helps drive sales" don't work. There's no specific tactic, no measurable outcome, and no timeframe. Even if the results came back positive, you wouldn't know what to do next.
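One way to keep hypotheses specific is to write them as a structured record rather than free text. That way a missing tactic, outcome, or timeframe is caught before the test launches. This is a hypothetical template, not a standard format, and the field names and market names are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    tactic: str                         # what you're testing, e.g. "paid social"
    outcome_metric: str                 # the outcome you expect to move, e.g. "purchases"
    treatment_markets: tuple[str, ...]  # where the tactic will run
    duration_weeks: int                 # the test window

    def __post_init__(self) -> None:
        # Reject the vague cases: no specific tactic, no measurable outcome, or no timeframe.
        if not self.tactic.strip() or not self.outcome_metric.strip():
            raise ValueError("A hypothesis needs a specific tactic and a measurable outcome.")
        if not self.treatment_markets:
            raise ValueError("A hypothesis needs at least one treatment market.")
        if self.duration_weeks <= 0:
            raise ValueError("A hypothesis needs a timeframe.")

    def statement(self) -> str:
        markets = ", ".join(self.treatment_markets)
        return (
            f"Over the next {self.duration_weeks} weeks, running {self.tactic} in "
            f"{markets} will drive more {self.outcome_metric} than matched markets "
            "where we don't run it."
        )


h = Hypothesis("paid social", "purchases", ("Market A", "Market B", "Market C"), 6)
print(h.statement())
```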

Set your KPIs.

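Decide upfront which primary metric will judge the test, such as purchases or revenue, along with any secondary metrics you want to watch. Choosing after the results are in makes it easy to cherry-pick.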

Create treatment and control groups.

Split by audience or geography. Only the treatment group sees the marketing activity. The control group stays clean. For many paid media tests, geographic splits are the most practical approach since you can simply run ads in some markets and not others.

Measure and compare.

Track results for both groups over the test window, then calculate the difference to estimate incremental lift and ROI.
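In a geo test, treatment and control markets rarely have identical baseline sales. A simple approach is to scale the control group by the pre-test ratio between the two before comparing. The sketch below assumes that ratio would have held during the test window without the campaign. It is one basic method among several, with synthetic control and regression-based approaches being common alternatives. The function name and figures are illustrative.

```python
def geo_lift(
    treat_pre: float,
    control_pre: float,
    treat_test: float,
    control_test: float,
) -> tuple[float, float]:
    """Return (incremental sales, relative lift) for a two-group geo test.

    Assumes the pre-period ratio between treatment and control markets
    would have held during the test window had the campaign not run.
    """
    scale = treat_pre / control_pre          # how large treatment markets normally are vs. control
    counterfactual = control_test * scale    # expected treatment sales without the campaign
    incremental = treat_test - counterfactual
    return incremental, incremental / counterfactual


# Illustrative totals for the pre-period and the test window.
incremental, lift = geo_lift(treat_pre=10_000, control_pre=8_000,
                             treat_test=12_100, control_test=8_800)
print(f"incremental sales: {incremental:.0f}, lift: {lift:.1%}")
# incremental sales: 1100, lift: 10.0%
```

Control markets grew 10% on their own during the test window. Only the growth in treatment markets beyond that is credited to the campaign.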

Good test design also accounts for seasonality and sample size. You want to detect meaningful changes, not chase random swings.
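On the sample-size side, a common starting point for audience-level tests is the normal-approximation formula for comparing two conversion rates. This sketch assumes a two-sided test at 5% significance and 80% power, which are conventional defaults rather than requirements, plus a user-level split. Geo tests, where the unit is a market rather than a person, need a different power analysis. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_group(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Approximate users needed per group to detect a relative lift in conversion rate.

    Uses the normal-approximation formula for a two-sided comparison of two proportions.
    """
    p1 = baseline_rate                          # control conversion rate
    p2 = baseline_rate * (1 + relative_lift)    # treatment rate if the hoped-for lift is real
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# A 2% baseline conversion rate and a hoped-for 10% relative lift.
print(sample_size_per_group(0.02, 0.10))
```

Smaller expected lifts or lower baseline rates push the required sample up quickly. That is one reason low-volume channels struggle to produce clean results.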

Different experiment types work better for different channels, privacy constraints, and funnel stages.

Audience-level holdout tests randomly split individuals into exposed and holdout segments. This works well anywhere you can split audiences cleanly, such as email, display, and paid social.
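A common way to implement the split is to hash each user ID. This behaves like random assignment while keeping every user in the same group for the life of the test. Here is a minimal sketch, assuming string user IDs and a hypothetical experiment name. The 10% holdout share is an illustrative default.

```python
import hashlib


def assign_group(user_id: str, experiment: str, holdout_share: float = 0.1) -> str:
    """Deterministically assign a user to "holdout" or "exposed" for one experiment."""
    # Salting with the experiment name gives each test an independent split.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 32 bits of the hash to a number in [0, 1].
    bucket = int(digest[:8], 16) / 0xFFFFFFFF
    return "holdout" if bucket < holdout_share else "exposed"


# Hypothetical experiment name and user IDs.
users = [f"user-{i}" for i in range(10_000)]
groups = [assign_group(u, "email-winback-test") for u in users]
print(groups.count("holdout") / len(groups))  # should land close to 0.10
```

Because assignment depends only on the ID and the experiment name, the same user lands in the same group every time they appear, with no lookup table to maintain.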

Geo-lift and matched-market tests assign regions, stores, or markets to test or control. This is your best option when user-level identifiers are limited or when you're testing large media investments like TV or OOH.
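A simplified version of matched-market assignment works like this: sort markets by a pre-period baseline, pair neighbors, and randomly pick one market from each pair for the test group. Real designs usually match on several metrics and check that pre-period trends move together. This sketch matches on a single number, and the market names and figures are hypothetical.

```python
import random


def matched_market_pairs(
    baseline_sales: dict[str, float], seed: int = 0
) -> tuple[list[str], list[str]]:
    """Pair markets with similar baselines, then randomly split each pair into test and control."""
    rng = random.Random(seed)  # seeded so the assignment can be reproduced
    ordered = sorted(baseline_sales, key=baseline_sales.get)
    test: list[str] = []
    control: list[str] = []
    # Walk the sorted list two at a time; with an odd count, the last market is left out.
    for a, b in zip(ordered[0::2], ordered[1::2]):
        if rng.random() < 0.5:
            a, b = b, a
        test.append(a)
        control.append(b)
    return test, control


# Hypothetical weekly pre-period sales by market.
markets = {
    "Market A": 120.0, "Market B": 95.0, "Market C": 300.0,
    "Market D": 310.0, "Market E": 100.0, "Market F": 125.0,
}
test, control = matched_market_pairs(markets, seed=42)
print("test:", test)
print("control:", control)
```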

Channel or tactic on/off tests turn entire channels or tactics on in some markets or periods and off in others. This helps you understand whether they add value on top of your always-on activity.

Some marketing teams pair these experiments with marketing mix models, using incrementality results to calibrate broader models and improve their predictive power.

The value of incrementality experiments comes from how the insights change strategy, not just from the lift numbers themselves. When test results become inputs to planning and budgeting, experimentation becomes a real advantage.

High-lift channels get more budget and creative attention. Low-lift or non-incremental programs get reduced, redesigned, or cut.

Results feed back into segmentation, creative, and media decisions. Over time, this builds a body of knowledge about what works for your brand across markets and under different conditions.

For most marketing organizations, the challenge is focus and execution, not a lack of tools. A few choices determine whether incrementality experiments become useful or just another initiative that fades.

Start from decisions, not data.

Choose tests that inform upcoming planning moments, key channel bets, or major creative shifts. The insights should be immediately actionable.

Design for feasibility.

Make sure you have enough volume, time, and clean separation between test and control, and don't over-complicate execution. Getting these inputs right requires some math upfront, chiefly a power calculation confirming the test can detect the lift you care about. This is often where a partner can help, making sure your test is set up to produce results you can actually trust.

Build simple governance.

Standardize how hypotheses are written, how results are reported, and how often leadership reviews learnings. Make it part of how you operate, not a one-off event.

Brands that get value from incrementality often start with a handful of high-impact tests, figure out what works operationally, and then expand from there.

Many teams understand incrementality in theory but struggle to make it an ongoing practice that fits their resources.

Mighty Roar can audit your existing measurement and media mix to identify where attribution is likely over- or understating impact, then prioritize experiments tied to your biggest strategic questions.

We can design and run incrementality experiments across channels and geographies—handling test design, group selection, and reporting so your team can focus on learning rather than statistics.

We also help connect the learnings to your planning cycles, bringing experiment insights into campaign briefs, budget decisions, and ongoing reporting. The goal is to make incrementality a capability you keep, not a one-time effort.

How long should an incrementality test run?

Most tests run 4 to 8 weeks, depending on your conversion cycle and traffic volume. You need enough time to collect meaningful results and account for normal fluctuations. Rush it, and you're measuring noise instead of signal.

How is incrementality different from attribution?

Attribution assigns credit to touchpoints in a customer's journey. Incrementality tests whether the marketing created the conversion in the first place. Attribution tells you where conversions came from. Incrementality tells you whether you actually caused them.

Can you run incrementality tests on a smaller budget?

Yes, but you need enough volume to detect a meaningful difference between test and control groups. Email and geo-targeted paid social work well for smaller budgets. National TV or brand awareness campaigns require more scale to get clean results.

When should you use an A/B test versus an incrementality test?

Use A/B tests to optimize creative, messaging, or landing pages. Use incrementality tests to decide whether a channel, campaign, or tactic is worth the investment. A/B testing answers "which version wins." Incrementality answers "should we do this at all."

Which channels work best for incrementality testing?

Email, paid social, display, and geo-targeted media (TV, OOH, radio) all work well because you can control exposure cleanly. Search is harder since you can't easily prevent someone from seeing your ad. Owned channels like email give you the cleanest test setup.

How do you know whether your results are statistically significant?

You need enough sample size and a large enough difference between groups. Most platforms calculate this automatically. If your test shows a 2% lift but your margin of error is ±5%, you don't have a real answer yet; you need more time or volume.
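To see what a margin of error means in practice, here is a minimal sketch that computes relative lift in conversion rate with a normal-approximation confidence interval. It assumes a user-level test with binary conversions. It also treats the control rate as fixed when converting the interval to relative terms, which is a simplification. The function name and counts are made up for illustration.

```python
import math
from statistics import NormalDist


def lift_with_ci(
    treat_conv: int, treat_n: int,
    control_conv: int, control_n: int,
    confidence: float = 0.95,
) -> tuple[float, float, float]:
    """Return (relative lift, lower bound, upper bound) for treatment vs. control."""
    p_t = treat_conv / treat_n
    p_c = control_conv / control_n
    diff = p_t - p_c
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(p_t * (1 - p_t) / treat_n + p_c * (1 - p_c) / control_n)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    # Express the interval relative to the control rate (treated as fixed).
    return diff / p_c, (diff - z * se) / p_c, (diff + z * se) / p_c


lift, low, high = lift_with_ci(1_020, 50_000, 1_000, 50_000)
print(f"lift {lift:.1%}, 95% CI [{low:.1%}, {high:.1%}]")
```

Here the point estimate is a 2% lift, but the interval runs from a loss to a gain. The test hasn't produced a real answer yet.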

No. The control group doesn't see the test activity, but they still see everything else you're running. You're only measuring the incremental impact of the specific thing you're testing, not your entire marketing mix.
